LLM models like GPT-4o are great when we want to generate unstructured data like essay, or poem. We can simply send the prompt, and retrieve the text from LLM and display to the user, or store in the file or SQL database.

PROBLEM

When I developed a few LLM-based applications, I discovered that I need more structured data to build something more useful than just a chatbot. But in more advanced applications you need JSON document to be returned from LLM.

In this article I will explain 2 methods of extracting JSON from LLM:

- Prompt Engineering

- JSON Schema – a new feature in Azure OpenAI

I will also compare the accuracy of both approaches to see which of them is more reliable and we’ll make 2 eperiments to confirm which approach should be used in production-ready .NET apps.

APPROACH 1: JSON output with Prompt Engineering

You can try to send LLM a prompt:

PROMPT: based on the data: {DATA} create JSON document that has format {FORMAT}This may work to some extent. It’s not perfect solution because of the following reasos:

- The response contains additional strings like: json, new line character and orher unuseful text. To further process the result from LLM we must first exclude those characters and then deserialize the output. This makes our C# code little unclear and long.

var prompt = $"Extract information from the data: {_cityData}. Your response must be in the following JSON format: {_cityFormat}";

var json = await _chatService.GetJsonFromPrompt(prompt);

cleanJson = json.Replace("json\n", "").Trim();

cleanJson = cleanJson.Replace("```", "").Trim();

cleanJson = cleanJson.Replace("\n", "").Trim();

var city = JsonSerializer.Deserialize<City>(cleanJson);2. Because of the additional characters in the response, we use additional tokens – for scalable applications we should keep it in mind.

3. Accuracy – when you run this code once or two, you can say: “What a great feature, I don’t care about the additional characters, I can remove them and it’s perfect!”. No. It isn’t. Why?

EXPERIMENT – JSON with Prompt Engineering

Let me show you the result of my experiment. I asked the LLM 100 times to generate valid JSON that I deserialized to C# object. Here is the code:

[Theory]

[InlineData(100)]

public async Task GetDataFormPrompt(int iterations)

{

int success = 0;

int fail = 0;

var resultPath = "result.txt";

if (!File.Exists(resultPath))

{

File.Create(resultPath).Close();

}

for (int i = 1; i <= iterations; i++)

{

var cleanJson = "";

try

{

var prompt = $"Extract information from the data: {_cityData}. Your response must be in the following JSON format: {_cityFormat}";

var json = await _chatService.GetJsonFromPrompt(prompt);

cleanJson = json.Replace("json\n", "").Trim();

cleanJson = cleanJson.Replace("```", "").Trim();

cleanJson = cleanJson.Replace("\n", "").Trim();

var city = JsonSerializer.Deserialize<City>(cleanJson);

if(city.Name != "Washington, D.C.")

{

throw new Exception();

}

var successText = $"Success, {cleanJson}";

File.AppendAllText(resultPath, successText + "\n");

success++;

} catch (Exception e)

{

var failText = $"Fail, {cleanJson}";

File.AppendAllText(resultPath, failText + "\n");

fail++;

}

}

var summaryText = $"Success:{success}/{iterations}";

File.AppendAllText(resultPath, summaryText + "\n");

Assert.Equal(iterations, success);

}EXPERIMENT RESULTS

I run the same code 100 times and checked the accuracy. Here are the results: only 86 times I was able to parse the JSON document generated by LLM. It means the accuracy of propt engineering JSON is only 86%. Not enough for production-ready application. Do you like to add try-catch statement to check if LLM failed to generate JSON and then repeat the call to LLM if necessary? Based on my experiment 14% of calls will end up with exception.

JSON WITH PROMPT ENGINEERING – CODE

Let’s say we want to exract all the necessary entities from Wikipedia artile about Washington, D. C. Let’s assume we have a model class that we want to fill with the values:

public class City

{

// Properties

public string Name { get; set; }

public string Country { get; set; }

public int Population { get; set; }

public double Area { get; set; } // in square kilometers

public string Region { get; set; }

public (double Latitude, double Longitude) Coordinates { get; set; }

public string TimeZone { get; set; }

public List<string> MajorLandmarks { get; set; }

public string Economy { get; set; }

public string Climate { get; set; }

public List<string> Transportation { get; set; }

public string History { get; set; }

public string Government { get; set; }

public string Language { get; set; }

public string Infrastructure { get; set; }

// Constructor

public City()

{

MajorLandmarks = new List<string>();

Transportation = new List<string>();

}

}APPROACH 2: JSON SCHEMA

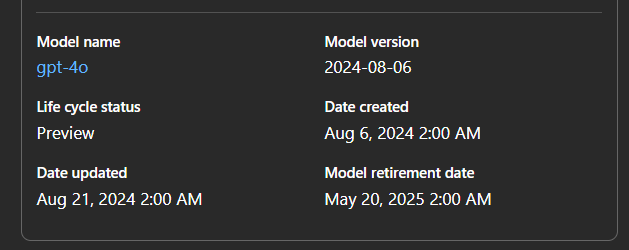

There is also a second, more modern technique called JSON Schema – it’s available in the newest GPT-4o model published in Azure OpenAI in August 2024. Here are some useful details about this model that I’m using in Azure AI Foundry – OpenAI service:

Here is the code:

public async Task<string> GetDataUsingJsonSchema(string input)

{

var format = ChatResponseFormat.CreateJsonSchemaFormat(

jsonSchemaFormatName: "city_result",

jsonSchema: BinaryData.FromString("""

{

"type": "object",

"properties": {

"Name": { "type": "string" },

"Country": { "type": "string" },

"Population": { "type": "integer" },

"Area": { "type": "number" },

"Region": { "type": "string" },

"Coordinates": {

"type": "object",

"properties": {

"Latitude": { "type": "number" },

"Longitude": { "type": "number" }

},

"required": ["Latitude", "Longitude"],

"additionalProperties": false

},

"TimeZone": { "type": "string" },

"MajorLandmarks": {

"type": "array",

"items": { "type": "string" }

},

"Economy": { "type": "string" },

"Climate": { "type": "string" },

"Transportation": {

"type": "array",

"items": { "type": "string" }

},

"History": { "type": "string" },

"Government": { "type": "string" },

"Language": { "type": "string" },

"Infrastructure": { "type": "string" }

},

"additionalProperties": false,

"required": [

"Name",

"Country",

"Population",

"Area",

"Region",

"Coordinates",

"TimeZone",

"MajorLandmarks",

"Economy",

"Climate",

"Transportation",

"History",

"Government",

"Language",

"Infrastructure"

]

}

"""),

jsonSchemaIsStrict: true);

#pragma warning disable SKEXP0010

var executionSettings = new OpenAIPromptExecutionSettings()

{

ResponseFormat = format,

Temperature = 0,

};

#pragma warning restore SKEXP0010

var httpClient = new HttpClient

{

Timeout = TimeSpan.FromSeconds(300) //300 seconds

};

var completionService = _kernel.GetRequiredService<IChatCompletionService>();

var chatHistory = new ChatHistory();

chatHistory.AddUserMessage(input);

try

{

var chatResponse = await completionService.GetChatMessageContentsAsync(chatHistory, executionSettings);

var json = chatResponse[0].Content;

return json;

} catch (Exception e)

{

//NOTE: check exceptions

}

return "";

}How does it work? First we need to specify the format of our response. It must match the model class properties. Remember about additional usings. All the usings required by Semantic Kernel to use JSON Schema are:

using Microsoft.SemanticKernel;

using Microsoft.SemanticKernel.ChatCompletion;

using Microsoft.SemanticKernel.Connectors.OpenAI;

using OpenAI.Chat;Now you can see that our code become more complex because you need to add entire JSON schema for your class in the code. But now we need to discuss more important thing – accuracy. I repeated the same experiment for my new code. This is the code:

[Theory]

[InlineData(100)]

public async Task GetUsingFormJsonSchema(int iterations)

{

int success = 0;

int fail = 0;

var resultPath = "result-json-schema.txt";

if (!File.Exists(resultPath))

{

File.Create(resultPath).Close();

}

for (int i = 1; i <= iterations; i++)

{

var json = "";

try

{

var prompt = $"Extract information from the data: {_cityData}.";

json = await _chatService.GetDataUsingJsonSchema(prompt);

var city = JsonSerializer.Deserialize<City>(json);

if (city.Name != "Washington, D.C.")

{

throw new Exception();

}

var successText = $"Success, {json}";

File.AppendAllText(resultPath, successText + "\n");

success++;

}

catch (Exception e)

{

var failText = $"Fail, {json}";

File.AppendAllText(resultPath, failText + "\n");

fail++;

}

}

var summaryText = $"Success:{success}/{iterations}";

File.AppendAllText(resultPath, summaryText + "\n");

Assert.Equal(iterations, success);

}

}EXPERIMENT RESULT: JSON SCHEMA

What’s the accuracy? 100/100 which means JSON Schema feature guarantees 100% accuracy without any need to retry LLM call. And this is the main benefit of using this method in your code. In terms of reliability JSON schema is much better than prompt engineering and function calling.

CONCLUSION AND MODE INFORMATION

I hope you liked this article. I will be happy if you share it on Linkedin so more C# developers can benefit from JSON Schema with Azure OpenAI GPT-4o and Semantic Kernel.

If you want to learn more about structured output in C# and Semantic Kernel, join Microsoft Reactor stream where Pamela Fox and I discuss the tate-of-the-art techniques to get the reliable JSON results from LLM. The stream is available HERE